I’ve always been fascinated by the kinds of thoughts we don’t act on. In psychiatry, they shape regret, resilience, and rumination. In neuroscience, they reveal a deep truth about how the brain handles uncertainty. Every morning when I’m running late, I catch myself thinking: “If only I’d left five minutes earlier.” It’s a fleeting thought, but it represents one of the most computationally sophisticated processes our brains perform: imagining alternative realities that never happened.

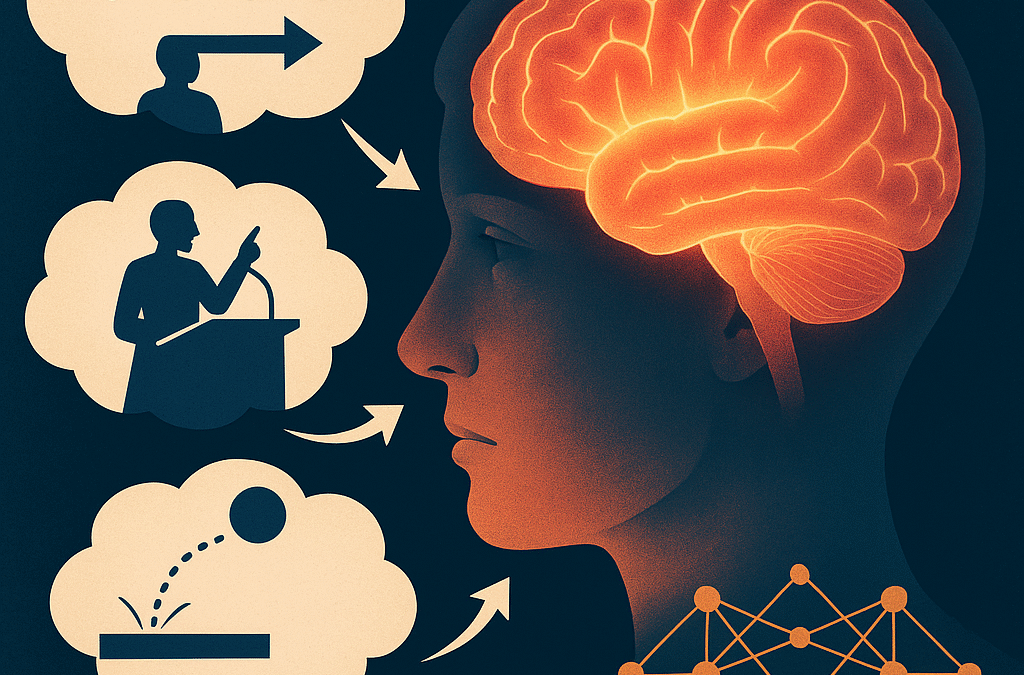

Your brain runs “what if” calculations like this all day, mostly without you noticing. What if I had taken the other route to work? What if I hadn’t said that in the meeting? What if the ball bounces differently than expected? This capacity is called counterfactual reasoning, and it is among the most demanding computations biological intelligence performs.

A recent study in Nature Human Behaviour by Ramadan, Tang, Watters, and Jazayeri (2025) examines why humans rely on these mentally expensive “what if” simulations. It identifies computational constraints that push our brains toward remarkably clever problem-solving strategies. The findings illuminate human cognition and may change how we think about intelligence more broadly.

The Computational Mystery: Why Do We Think in “What Ifs”?

From a purely computational standpoint, counterfactual reasoning seems inefficient. When facing a complex decision, an optimal algorithm would simply compute the joint probability of all possible outcomes and pick the best option. So why do humans constantly engage in the seemingly wasteful exercise of imagining alternatives?

The answer, as Ramadan and colleagues discovered, lies in the fundamental constraints that shape how our brains process information. Using an ingenious H-maze task where participants had to track an invisible ball through branching pathways, they uncovered three critical computational bottlenecks that force human cognition into hierarchical and counterfactual strategies:

1. Parallel Processing Bottleneck: Our brains cannot track all possible trajectories simultaneously. We must break complex problems into sequential, hierarchical steps.

2. Counterfactual Processing Noise: When we engage in “what if” thinking, our working memory introduces noise that degrades the fidelity of these mental simulations.

3. Rational Resource Allocation: Humans adaptively adjust their reliance on counterfactuals based on how much these mental simulations cost them.
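
To make the contrast concrete, here is a toy Python sketch of the two strategies. It is not the task or the model from the study: the four-path maze, the likelihood numbers, and the `threshold` and `memory_noise` parameters are all illustrative assumptions. The joint strategy scores every path at once. The hierarchical strategy commits to a side first, then revisits the side not taken (the counterfactual) only when the evidence on the committed side is weak, reading that alternative back through noisy memory.

```python
import random

PATHS = [("left", "up"), ("left", "down"), ("right", "up"), ("right", "down")]


def joint_choice(likelihood):
    """Parallel strategy: score all four paths at once and take the best."""
    return max(PATHS, key=lambda path: likelihood[path])


def hierarchical_choice(likelihood, threshold=0.5, memory_noise=0.0, rng=None):
    """Sequential strategy with an optional counterfactual revisit (toy model)."""
    rng = rng or random.Random(0)
    # Stage 1: commit to a side using the evidence summed over its two arms.
    side_score = {side: sum(likelihood[(side, arm)] for arm in ("up", "down"))
                  for side in ("left", "right")}
    side = max(side_score, key=side_score.get)
    # Stage 2: pick the best arm on the committed side only.
    arm = max(("up", "down"), key=lambda a: likelihood[(side, a)])
    best = likelihood[(side, arm)]
    if best >= threshold:
        return (side, arm)
    # Counterfactual step: evidence is weak, so re-evaluate the side not taken.
    # The recalled evidence is corrupted by memory noise (an assumed Gaussian).
    other = "right" if side == "left" else "left"
    recalled = {a: likelihood[(other, a)] + rng.gauss(0.0, memory_noise)
                for a in ("up", "down")}
    alt_arm = max(recalled, key=recalled.get)
    return (other, alt_arm) if recalled[alt_arm] > best else (side, arm)


likelihood = {("left", "up"): 0.30, ("left", "down"): 0.30,
              ("right", "up"): 0.45, ("right", "down"): 0.05}
print(joint_choice(likelihood))                        # ('right', 'up')
print(hierarchical_choice(likelihood, threshold=0.0))  # ('left', 'up')
print(hierarchical_choice(likelihood))                 # ('right', 'up')
```

With the revisit switched off (`threshold=0.0`), the sequential strategy settles on the wrong side. The counterfactual step rescues it here because `memory_noise` is zero. Raising `memory_noise` makes the revisit less reliable, which is the situation in which a resource-rational agent would lean on it less.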

Modeling the Human Mind with Recurrent Neural Networks

The modeling work is where the study says the most about intelligence itself. When Ramadan et al. created artificial neural networks and subjected them to the same computational constraints humans face, something remarkable happened: only the networks constrained by all three bottlenecks reproduced human-like behavior.

This result shows what recurrent neural networks (RNNs) can offer as models of human cognition. Given the same limitations that shape human thinking, the networks behaved much like people. The key insight is that RNNs can capture mental processes like hierarchical and counterfactual reasoning when they face the same computational bottlenecks humans do.

Neural Architecture of Counterfactual Reasoning

The neural implementation of counterfactual reasoning extends well beyond frontal control regions. Van Hoeck and colleagues’ 2013 fMRI study showed that counterfactual thinking engages a distributed network that overlaps with the brain’s episodic memory system.

When participants imagined “upward counterfactuals” (better outcomes for negative past events), their brains activated the same core memory network used for remembering the past and imagining the future: hippocampus, posterior cingulate, inferior parietal lobule, lateral temporal cortices, and medial prefrontal cortex.

What makes counterfactual reasoning computationally expensive becomes clear in this neural architecture. Counterfactual thinking recruited these memory regions more extensively than episodic past or future thinking, and additionally engaged bilateral inferior parietal lobe and posterior medial frontal cortex.

The extra brain activity reflects just how demanding this kind of mental juggling really is: counterfactual reasoning requires simultaneously maintaining factual and counterfactual representations while actively inhibiting the dominant factual reality.

The brain has evolved specialized circuitry for tracking “what might have been.” Boorman and colleagues discovered that lateral frontopolar cortex, dorsomedial frontal cortex, and posteromedial cortex form a dedicated network for encoding counterfactual choice values: tracking not just what happened, but whether alternative options might be worth choosing in the future.

This network operates in parallel to the ventromedial prefrontal system that tracks the value of chosen options, suggesting that the brain maintains separate computational channels for factual and counterfactual value processing.
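
One way to picture two value channels is a learner that updates its estimate for the option it did not choose whenever it sees what that option would have paid. The sketch below is a generic delta-rule illustration, not the model fitted by Boorman and colleagues; the option names, the learning rate `alpha`, and the `switch_evidence` helper are assumptions made for the example.

```python
def update_values(values, outcomes, alpha=0.5):
    """Delta-rule update for every option whose outcome was observed."""
    return {opt: v + alpha * (outcomes[opt] - v) if opt in outcomes else v
            for opt, v in values.items()}


def switch_evidence(values, chosen):
    """Value of the best unchosen option minus the value of the chosen one."""
    best_alternative = max(v for opt, v in values.items() if opt != chosen)
    return best_alternative - values[chosen]


values = {"A": 0.5, "B": 0.5}
# The learner chose A and got nothing, but also saw that B would have paid 1.
values = update_values(values, {"A": 0.0, "B": 1.0})
print(values)                               # {'A': 0.25, 'B': 0.75}
print(switch_evidence(values, chosen="A"))  # 0.5
```

A positive `switch_evidence` says the path not taken now looks better than the one taken, which is the kind of signal that would favor switching on the next choice.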

Perhaps most remarkably, recent work has shown that counterfactual information fundamentally transforms how the brain codes value itself. When counterfactual outcomes are available, medial prefrontal and cingulate cortex shift from absolute to relative value coding.

Think of it this way: losing $10 feels terrible if you could have won $50, but feels great if you could have lost $100. Likewise, the same neutral outcome (neither a gain nor a loss) is processed as positive in a loss context, where it means the absence of punishment, but as negative in a gain context, where it means the absence of reward.
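
The dollar example can be written as simple arithmetic. This is a deliberately minimal sketch that assumes the reference point is just the foregone outcome; actual neural coding is surely richer, and the function names are mine rather than anything from the cited work.

```python
def absolute_value(outcome):
    """Absolute code: the outcome is valued on its own."""
    return outcome


def relative_value(outcome, foregone):
    """Relative code: the outcome is valued against what could have happened."""
    return outcome - foregone


# Losing $10 when you could have won $50, versus when you could have lost $100.
print(absolute_value(-10))                 # -10
print(relative_value(-10, foregone=50))    # -60
print(relative_value(-10, foregone=-100))  # 90

# A zero outcome: good news in a loss context, bad news in a gain context.
print(relative_value(0, foregone=-1), relative_value(0, foregone=1))  # 1 -1
```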

This neural flexibility mirrors the adaptive computational strategies revealed in behavioral studies: the brain dynamically reconfigures its representational schemes based on available information and processing constraints.

These findings illuminate why counterfactual reasoning is both computationally expensive and evolutionarily preserved. The enhanced neural demands reflect genuine computational costs: maintaining multiple alternative representations, binding novel scenario elements, and managing conflict between factual and counterfactual worlds. Yet this system enables the kind of flexible, context-sensitive reasoning that allows humans to learn from paths not taken and adapt behavior based on imagined alternatives.

The Bounded Rationality Renaissance

These discoveries are part of a broader renaissance in understanding bounded rationality, the idea that intelligent behavior emerges not from perfect optimization, but from smart adaptations to computational limitations.

Herbert Simon’s revolutionary concept of bounded rationality challenged the assumptions of perfect rationality in classical economic theory, proposing instead that individuals “satisfice” (seeking good enough solutions rather than optimal ones) due to limitations in computation, time, information, and cognitive resources.

Simon’s work recognized that perfectly rational decisions are often infeasible in practice, because natural decision problems are intractable and the computational resources available for solving them are finite. This insight has profound implications for both understanding human cognition and designing artificial intelligence systems.
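
The difference between optimizing and satisficing is easy to see in a few lines of Python. The routes and travel times below are made up, and the aspiration level of 30 minutes is an arbitrary assumption; the point is only that the satisficer stops evaluating as soon as something is good enough.

```python
def optimize(options, evaluate):
    """Evaluate every option and return the best one plus the evaluation count."""
    best = max(options, key=evaluate)
    return best, len(options)


def satisfice(options, evaluate, aspiration):
    """Return the first option that is good enough, plus the evaluation count."""
    for count, option in enumerate(options, start=1):
        if evaluate(option) >= aspiration:
            return option, count
    return None, len(options)


# Hypothetical commute times in minutes; shorter is better, so score = -minutes.
routes = {"highway": 32, "main street": 27, "back roads": 25, "bridge": 41}


def score(route):
    return -routes[route]


print(optimize(list(routes), score))                   # ('back roads', 4)
print(satisfice(list(routes), score, aspiration=-30))  # ('main street', 2)
```

The satisficer ends up two minutes slower but does half the evaluation work, a trade that becomes more attractive as the number of options grows.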

The Bigger Picture

The Ramadan study reveals something profound: the cognitive strategies we think of as distinct (hierarchical reasoning, counterfactual thinking, simple optimization) actually lie along a continuum. Human intelligence dynamically shifts between these approaches based on available mental resources and task demands.

This has implications beyond neuroscience. If counterfactual reasoning emerges from computational constraints rather than being hardwired, it suggests these “what if” processes might be fundamental to any sufficiently complex intelligence, biological or artificial.

Clinical Frontiers: When Counterfactuals Break Down

From a clinical perspective, this research offers new windows into psychiatric and neurological conditions. Counterfactual reasoning depends on integrative networks for affective processing, mental simulation, and cognitive control, and these systems are systematically altered in psychiatric illness and neurological disease.

Consider a patient with OCD who gets trapped in endless loops of “what if I didn’t check the door?” or someone with depression whose counterfactual thinking spirals into “if only I were different, everything would be better.” Understanding the computational basis of these patterns could lead to more targeted therapeutic approaches.

Patients with schizophrenia show specific deficits in counterfactual reasoning when complex non-factual elements are needed to understand social environments. Mapping how these computational processes break down may give us new tools for both diagnosis and treatment.

The Bottom Line: Constraints as Features

The story of counterfactual reasoning is a story about the power of constraints. What initially appears to be a computational limitation (our inability to process all information in parallel) turns out to be the very foundation of human cognitive flexibility.

The human brain’s “what if” engine represents an elegant solution that emerges from the interplay between computational constraints and adaptive intelligence. As we stand on the brink of artificial general intelligence, perhaps the secret lies not in building systems that can process everything at once, but systems that can gracefully adapt to the fundamental constraints that shape all intelligence.

The future of AI may not lie in eliminating human limitations, but in understanding why those limitations exist and what remarkable capabilities they make possible.

This convergence of neuroscience, cognitive science, and AI represents a fundamental shift in how we understand intelligence. Rather than seeing computational constraints as problems to solve, we’re beginning to recognize them as the very features that make flexible, adaptive intelligence possible. The brain’s “what if” engine may be a blueprint for the next generation of truly intelligent machines.

The next time you wonder what might have been, remember: that question may be the very core of what makes you human.

Bibliography

Boorman, E. D., Behrens, T. E., & Rushworth, M. F. (2011). Counterfactual choice and learning in a neural network centered on human lateral frontopolar cortex. PLoS Biology, 9(6), e1001093.

Pischedda, D., Palminteri, S., & Coricelli, G. (2020). The effect of counterfactual information on outcome value coding in medial prefrontal and cingulate cortex: From an absolute to a relative neural code. Journal of Neuroscience, 40(16), 3268-3277.

Ramadan, M., Tang, C., Watters, N., & Jazayeri, M. (2025). Computational basis of hierarchical and counterfactual information processing. Nature Human Behaviour. doi:10.1038/s41562-025-02232-3.

Simon, H. A. (1955). A behavioral model of rational choice. Quarterly Journal of Economics, 69(1), 99-118.

Van Hoeck, N., Ma, N., Ampe, L., Baetens, K., Vandekerckhove, M., & Van Overwalle, F. (2013). Counterfactual thinking: An fMRI study on changing the past for a better future. Social Cognitive and Affective Neuroscience, 8(5), 556-564.

Van Hoeck, N., Watson, P. D., & Barbey, A. K. (2015). Cognitive neuroscience of human counterfactual reasoning. Frontiers in Human Neuroscience, 9, 420.

Zador, A., Escola, S., Richards, B., et al. (2023). Catalyzing next-generation Artificial Intelligence through NeuroAI. Nature Communications, 14, 1597.